There is increasing scrutiny around the use of artificial intelligence (AI) and algorithms, along with growing awareness of how these technologies can amplify certain consumer risks.

Fairness requires handling personal data in ways that align with people’s reasonable expectations, avoiding actions that result in unjustified negative impacts on them. Using AI to process personal data in a manner that causes unjust discrimination among individuals directly violates the principle of fairness.

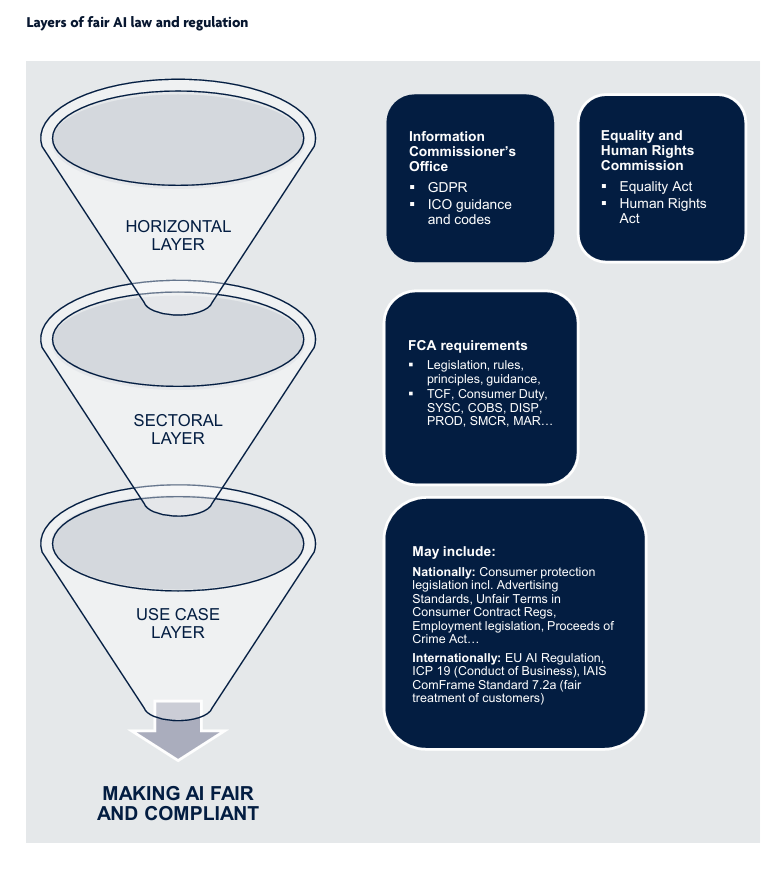

Legal Frameworks Governing AI Fairness

To address fairness, organizations must align with regional and global regulations that define the acceptable use of AI in various domains. Some of the legal frameworks include:

- EU AI Act
  - Defines risk-based classifications for AI systems, mandating transparency, accountability, and fairness for high-risk applications.
- GDPR (General Data Protection Regulation)
  - Focuses on data protection and privacy. Its fairness principle also means that personal data processed by AI systems must not be used in ways that produce unjustified discriminatory outcomes.
- U.S. Algorithmic Accountability Act
  - Proposed U.S. legislation that would require covered organizations to perform impact assessments evaluating the fairness, bias, and transparency of AI systems.
- Sector-Specific Regulations
  - Examples include the Equal Credit Opportunity Act (ECOA) in finance and the Health Insurance Portability and Accountability Act (HIPAA) in healthcare.

Source: UKFinance.org.uk

Ethical Principles for AI Fairness

Legal compliance provides a baseline, but ethical principles ensure organizations go beyond the minimum requirements to build trust and accountability:

- Equity
  - Ensure that AI systems do not disproportionately benefit or harm any particular group.
- Transparency
  - Foster understanding by making AI decision-making processes explainable to stakeholders.
- Accountability
  - Establish clear accountability mechanisms to address fairness violations.
- Inclusivity
  - Engage diverse stakeholders in the development and validation of AI systems.
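
One way to make the Equity principle measurable is to compare how often each group receives a favourable outcome. The following is a minimal sketch, assuming binary decisions (1 = favourable) and a single group attribute. The function names and sample data are hypothetical and do not come from any particular library or from the sources cited here.

```python
from collections import defaultdict


def selection_rates(groups, decisions):
    """Return the share of favourable decisions (1) for each group."""
    totals = defaultdict(int)
    favourable = defaultdict(int)
    for group, decision in zip(groups, decisions):
        totals[group] += 1
        favourable[group] += decision
    return {g: favourable[g] / totals[g] for g in totals}


def disparate_impact_ratio(groups, decisions):
    """Lowest group selection rate divided by the highest (1.0 = parity)."""
    rates = selection_rates(groups, decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody received a favourable decision, so no disparity
    return min(rates.values()) / highest


# Hypothetical data: group A gets 3 of 4 favourable decisions, group B gets 1 of 4.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
decisions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, decisions)
ratio = disparate_impact_ratio(groups, decisions)
print(rates, round(ratio, 2))
```

Here the ratio is roughly 0.33, meaning group B is selected about a third as often as group A. What ratio counts as acceptable is a policy and legal question for the organization, not something this sketch decides.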

Challenges in Ensuring AI Fairness Compliance

- Dynamic Regulations
  - Keeping up with evolving laws like the EU AI Act or sector-specific requirements can be challenging.
- Conflict Between Fairness and Performance
  - Mitigating fairness issues may reduce model accuracy.
- Lack of Standardization
  - No universal standard exists for fairness metrics or benchmarks.
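
The lack of a standard metric matters in practice because common fairness metrics can disagree on the same predictions. The sketch below, using hypothetical data and helper names, computes two widely used measures: the demographic parity difference (gap in selection rates) and the equal opportunity difference (gap in true positive rates). It assumes binary labels and predictions and that every group contains at least one actual positive.

```python
def rate(values):
    """Mean of a list of 0/1 values, or 0.0 for an empty list."""
    return sum(values) / len(values) if values else 0.0


def demographic_parity_difference(groups, y_pred):
    """Largest gap between groups in the share of positive predictions."""
    by_group = {}
    for g, p in zip(groups, y_pred):
        by_group.setdefault(g, []).append(p)
    rates = [rate(v) for v in by_group.values()]
    return max(rates) - min(rates)


def equal_opportunity_difference(groups, y_true, y_pred):
    """Largest gap between groups in true positive rate."""
    by_group = {}
    for g, t, p in zip(groups, y_true, y_pred):
        if t == 1:  # only actual positives count towards the true positive rate
            by_group.setdefault(g, []).append(p)
    tprs = [rate(v) for v in by_group.values()]
    return max(tprs) - min(tprs)


# Hypothetical data: both groups are selected at the same rate,
# but qualified members of group B are approved only half as often.
groups = ["A"] * 4 + ["B"] * 4
y_true = [1, 1, 0, 0, 1, 1, 1, 1]
y_pred = [1, 1, 0, 0, 1, 1, 0, 0]

dp_diff = demographic_parity_difference(groups, y_pred)
eo_diff = equal_opportunity_difference(groups, y_true, y_pred)
print(dp_diff, eo_diff)
```

On this data the demographic parity difference is 0.0 while the equal opportunity difference is 0.5, so the same model looks fair under one metric and unfair under the other. Which metric applies depends on the domain and the regulation in question.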

Here are some resources to help you address fairness issues.

AI Fairness – Legal and Ethical Compliance – £99

Empower your team to drive Responsible AI by fostering alignment with compliance needs and best practices.

- Practical, easy-to-use guidance from problem definition to model monitoring
- Checklists for every phase in the AI/ML pipeline

AI Fairness Mitigation Package – £999

The ultimate resource for organisations ready to tackle bias at scale and drive responsible AI practices, covering every stage from problem definition through to model monitoring.

Customised AI Fairness Mitigation Package – £2499

Summary

Ensuring fairness in AI systems is a multifaceted challenge requiring legal compliance, ethical commitment, and practical implementation. Organizations must integrate fairness into their AI design and deployment workflows, aligning with regulations and ethical standards while addressing domain-specific needs. By adopting proactive measures, leveraging fairness tools, and fostering a culture of accountability, businesses can not only comply with legal requirements but also build trust with users, clients, and regulators.

Sources

DRCF, https://www.drcf.org.uk/publications/blogs/fairness-in-ai-a-view-from-the-drcf/

ICO, https://ico.org.uk/for-organisations/uk-gdpr-guidance-and-resources/artificial-intelligence/guidance-on-ai-and-data-protection/how-do-we-ensure-fairness-in-ai/what-about-fairness-bias-and-discrimination/#:~:text=Fairness%20means%20you%20should%20handle,will%20violate%20the%20fairness%20principle.

EU AI Act, https://artificialintelligenceact.eu/

UKFinance.org, FAIR USE OF AI. A UK FINANCE WHITEPAPER, https://www.ukfinance.org.uk/system/files/2022-06/AI%20fairness%20in%20financial%20services_FINAL.pdf