The EU AI Act entered into force on August 1, 2024, and its prohibitions on certain AI practices deemed harmful or abusive have applied since February 2, 2025. As organisations navigate this new landscape, understanding these prohibitions and developing AI literacy are crucial steps.

According to the European Commission’s guidelines, “Article 5 AI Act prohibits the placing on the EU market, putting into service, or use of certain AI systems for manipulative, exploitative, social control or surveillance practices, which by their inherent nature violate fundamental rights and Union values. Recital 28 AI Act clarifies that such practices are particularly harmful and abusive and should be prohibited because they contradict the Union values of respect for human dignity, freedom, equality, democracy, and the rule of law, as well as fundamental rights.”

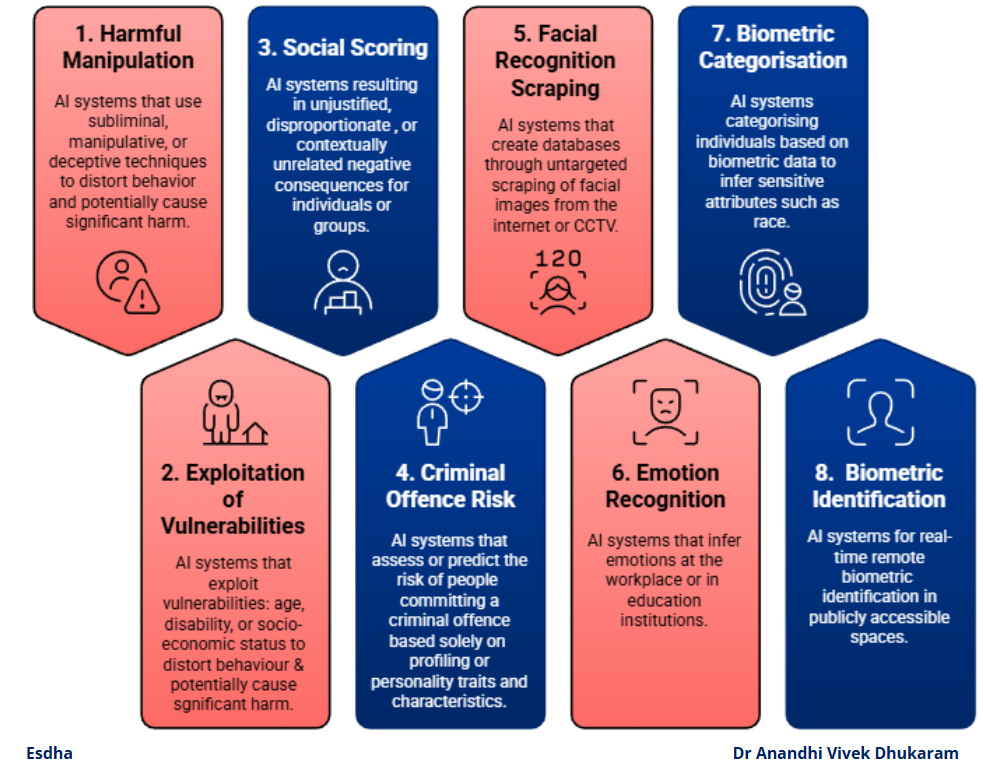

Overview of Prohibitions listed in Article 5 AI Act

Article 5(1)(a) Harmful manipulation and deception: AI systems that deploy subliminal techniques beyond a person’s consciousness or purposefully manipulative or deceptive techniques, with the objective or with the effect of distorting behaviour, causing or reasonably likely to cause significant harm.

Article 5(1)(b) Harmful exploitation of vulnerabilities: AI systems that exploit vulnerabilities due to age, disability or a specific social or economic situation, with the objective or with the effect of distorting behaviour, causing or reasonably likely to cause significant harm.

Article 5(1)(c) Social Scoring: AI systems that evaluate or classify natural persons or groups of persons based on social behaviour or personal or personality characteristics, with the social score leading to detrimental or unfavourable treatment when data comes from unrelated social contexts or such treatment is unjustified or disproportionate to the social behaviour.

Article 5(1)(d) Individual criminal offence risk assessment and prediction: AI systems that assess or predict the risk of people committing a criminal offence based solely on profiling or personality traits and characteristics; except to support a human assessment based on objective and verifiable facts directly linked to a criminal activity.

Article 5(1)(e) Untargeted scraping to develop facial recognition databases: AI systems that create or expand facial recognition databases through untargeted scraping of facial images from the internet or closed-circuit television (‘CCTV’) footage.

Article 5(1)(f) Emotion recognition: AI systems that infer emotions at the workplace or in education institutions; except for medical or safety reasons.

Article 5(1)(g) Biometric categorisation: AI systems that categorise people based on their biometric data to deduce or infer their race, political opinions, trade union membership, religious or philosophical beliefs, sex life or sexual orientation; except for labelling or filtering of lawfully acquired biometric datasets, including in the area of law enforcement.

Article 5(1)(h) Real-time remote biometric identification (‘RBI’): AI systems for real-time remote biometric identification in publicly accessible spaces for the purposes of law enforcement; except if necessary for the targeted search of specific victims, the prevention of specific threats including terrorist attacks, or the search of suspects of specific offences (further procedural requirements, including for authorisation, outlined in Article 5(2)-(7) AI Act).
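For teams keeping an inventory of AI systems, the overview above can be held as a small lookup table and used for a first-pass screening. The following is a minimal sketch, not a compliance tool: the labels are shortened from the list above, while the function name `flag_for_review` and the yes/no answer format are hypothetical and chosen only for illustration. A flag means the system needs a closer review, including of the exceptions listed above; it does not mean the system is prohibited.

```python
# Short labels for the Article 5(1) prohibitions, taken from the overview above.
PROHIBITED_PRACTICES = {
    "5(1)(a)": "Harmful manipulation and deception",
    "5(1)(b)": "Harmful exploitation of vulnerabilities",
    "5(1)(c)": "Social scoring",
    "5(1)(d)": "Individual criminal offence risk assessment and prediction",
    "5(1)(e)": "Untargeted scraping to develop facial recognition databases",
    "5(1)(f)": "Emotion recognition at the workplace or in education",
    "5(1)(g)": "Biometric categorisation",
    "5(1)(h)": "Real-time remote biometric identification",
}


def flag_for_review(answers: dict[str, bool]) -> list[str]:
    """Return the article references answered 'yes', in Article 5 order.

    `answers` is a hypothetical screening form: it maps an article
    reference such as "5(1)(f)" to True if the system might fall under it.
    References that are missing from `answers` are treated as 'no'.
    """
    unknown = set(answers) - set(PROHIBITED_PRACTICES)
    if unknown:
        raise KeyError(f"Unknown article references: {sorted(unknown)}")
    return [ref for ref in PROHIBITED_PRACTICES if answers.get(ref, False)]


# Hypothetical example: an HR tool that might infer employee emotions.
for ref in flag_for_review({"5(1)(f)": True, "5(1)(a)": False}):
    print(f"Article {ref}: {PROHIBITED_PRACTICES[ref]} - needs review")
```

Keeping the references as dictionary keys means the screening output always follows the order of Article 5, whatever order the answers were entered in.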

Penalties for non-compliance can reach up to €35 million or 7% of global annual turnover, whichever is higher.
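The "whichever is higher" rule is simple arithmetic, and a short sketch makes the crossover point visible. The example below assumes only the two figures stated above; the function name `max_penalty_eur` and the turnover values are hypothetical, and the result is the upper bound of a possible fine, not a prediction of what a regulator would impose.

```python
from decimal import Decimal

FIXED_AMOUNT_EUR = Decimal("35000000")  # EUR 35 million, as stated above
TURNOVER_RATE = Decimal("0.07")         # 7% of global annual turnover


def max_penalty_eur(global_annual_turnover_eur: Decimal) -> Decimal:
    """Return the upper bound of the fine: the higher of the two amounts."""
    turnover_based = global_annual_turnover_eur * TURNOVER_RATE
    return max(FIXED_AMOUNT_EUR, turnover_based)


# Hypothetical turnover figures, for illustration only.
# EUR 100 million turnover: 7% is EUR 7 million, so the fixed amount is higher.
print(max_penalty_eur(Decimal("100000000")))
# EUR 1 billion turnover: 7% is EUR 70 million, which exceeds EUR 35 million.
print(max_penalty_eur(Decimal("1000000000")))
```

Under these figures, the turnover-based amount overtakes the fixed amount once global annual turnover exceeds €500 million (€35 million divided by 0.07).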

Next Steps

To ensure compliance and harness the benefits of AI responsibly, organisations should focus on:

- AI Literacy Programs: Implement comprehensive training to equip staff with essential knowledge about AI systems, their capabilities, and their limitations.

- Workshops: Run interactive sessions that explore AI’s impact on specific industries and roles, fostering a deeper understanding of AI applications.

- Consulting Services: Engage with our AI experts to assess current practices, identify potential risks, and develop strategies for responsible AI implementation.

By prioritising AI literacy and seeking expert guidance, organisations can navigate the complexities of the EU AI Act while driving innovation and maintaining ethical standards in AI development and deployment.

Free AI Literacy Resources

- Sampling Bias in Machine Learning

- Social Bias in Machine Learning

- Representation Bias in Machine Learning

3-4 Hour EU AI Act Workshop for Technical Compliance (up to 10 members) – £2999

Our interactive workshop provides practical guidance on compliance, risk assessment, and responsible AI implementation. Learn from experts, collaborate with peers, and gain the knowledge you need to navigate the evolving AI regulatory landscape.

3-4 Hour EU AI Act AI Literacy Training (up to 20 members) – £2999

Our introductory AI literacy training programs equip your workforce with the knowledge they need to make informed decisions about AI. From foundational concepts to ethical considerations, we’ll help your team become AI literate.

Customised Ethical AI Framework Development

Customised Risk Management Framework Implementation

EU AI Act Technical Compliance Consulting